Welcome to the Data Analytics and Machine Learning using Python Bootcamp at MSRIT

The Data Analytics and Machine Learning using Python bootcamp is intended to give you a flavor of many aspects of Data Science and Machine Learning with the goal of inspiring you to go on your own learning journey.

In this bootcamp we will cover the following:

- Introduction to Python programming

- Data Analysis

- Data Visualization

- Machine Learning

- How to Keep Learning

Follow me on LinkedIn where I share updates and suggestions for early-career Data Science/Machine Learning professionals and students looking to gain a head start in this field.

Essential Data Science Skills

Data Science Technical Skills

A data scientist may require many skills, but what sets them apart is their technical knowledge. Many technical skills and knowledge of specialized tools will be essential for data scientists to be familiar with, but there exists a core set of technical knowledge that can be applied to a majority of problems across domains.

Data scientists use programming skills to apply techniques such as machine learning, artificial intelligence (AI) and data mining. It is essential for them to have a good grasp on the mathematics and statistics involved in these techniques. This allows a data scientist to know when to apply each technique.

In addition to understanding the fundamentals, data scientists should be familiar with the popular programming languages and tools used to implement these techniques.

Data Visualization

Data visualization is an essential skill to acquire for a data scientist. Visualization enables the data scientist to see patterns and guide their exploration of the data. Second, it allows them to tell a compelling story using data. These are both critical aspects of the data science workflow.

Programming/Software

Data scientists use a variety of programming languages and software packages to comprehensively and efficiently extract, clean, analyze, and visualize data. Though there are always new tools in the rapidly changing world of data science, a few have stood the test of time.

Below are a few important and popularly used tools that aspiring data scientists should familiarize themselves with to develop programming and software data scientist skills:

R

R was once confined almost exclusively to academia, but social networking services, financial institutions, and media outlets now use this programming language and software environment for statistical analysis, data visualization, and predictive modeling. R is open-source and has a long history of use for statistics and data analytics.

Python

Python, unlike R, was not primarily designed for data analysis. The pandas Python library was created to fill this gap. Python has gained in popularity thanks to a very extensive ecosystem of tools and libraries for all aspects of the data science workflow, in addition to being used for software engineering tasks.

Tableau

Tableau provides a high-level interface for exploring and visualizing data in friendly and dynamic dashboards. These dashboards are essential at organizations that prioritize data-driven decision making.

Hadoop

Hadoop is an open-source software framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. Hadoop offers computing power, flexibility, fault tolerance and scalability. Hadoop is developed by the Apache Software Foundation and includes various tools such as the Hadoop Distributed File System and an implementation of the MapReduce programming model.

SQL

SQL, or Structured Query Language, is a special-purpose programming language for managing data held in relational database management systems. There are multiple implementations of the same general syntax, including MySQL, SQLite and PostgreSQL.

Some of what you can do with SQL—data insertion, queries, updating and deleting, schema creation and modification, and data access control—you can also accomplish with R, Python, or even Excel, but writing your own SQL code could be more efficient and yield reproducible scripts.

Apache Spark

Similar to Hadoop, Spark is a cluster computing framework that enables clusters of computers to process data in parallel. Spark is faster than Hadoop's MapReduce at many tasks because it keeps data in RAM where possible. It can replace Hadoop's MapReduce implementation and is often run on top of the Hadoop Distributed File System, although it also works with other storage systems.

Statistics/Mathematics

Today, software runs all the necessary statistical tests, but a data scientist still needs the judgment to know which test to run, when to run it, and how to interpret the results.

A solid understanding of multivariable calculus and linear algebra, which form the basis of many data science and machine learning techniques, will allow a data scientist to build their understanding on strong foundations.

An understanding of statistical concepts will help data scientists develop the skills to understand the capabilities, but also the limitations and assumptions of these techniques. A data scientist should understand the assumptions that need to be met for each statistical test.

Data scientists don’t only use complex techniques like neural networks to derive insight. Even linear regression is a form of machine learning that can provide valuable learnings. Simply plotting data on a chart and understanding what it means are basic but essential first steps in the data science process.
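As a rough illustration of the point about linear regression, the sketch below fits a straight line with NumPy's `polyfit`. The hours and scores are made-up numbers chosen to lie exactly on a line with slope 2 and intercept 50; they are an assumption for the example, not data from this bootcamp.

```python
import numpy as np

# Hypothetical data: hours studied vs. exam score (invented for illustration).
hours = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
scores = np.array([52.0, 54.0, 56.0, 58.0, 60.0])

# Fit a straight line: scores ≈ slope * hours + intercept.
slope, intercept = np.polyfit(hours, scores, deg=1)

# Use the fitted line to estimate the score for 6 hours of study.
predicted = slope * 6.0 + intercept
print(f"slope={slope:.2f}, intercept={intercept:.2f}, predicted={predicted:.2f}")
```

With real data the points will not sit exactly on the line, so plotting them alongside the fit is a sensible first check.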

Mathematical concepts such as logarithmic and exponential relationships are common in real-world data. Understanding and applying both the fundamentals as well as advanced statistical techniques are skills that data scientists need to find meaning in data.
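One common way to work with an exponential relationship is to take logarithms, which turns it into a straight line that can be fitted with ordinary linear regression. The sketch below assumes synthetic data generated from `100 * exp(0.5 * day)`; the variable names and numbers are invented for illustration.

```python
import numpy as np

# Synthetic data following an exponential trend (an assumption for this example).
days = np.arange(6)
users = 100.0 * np.exp(0.5 * days)

# log(users) = log(initial) + rate * days, which is linear in days.
rate, log_initial = np.polyfit(days, np.log(users), deg=1)
initial = np.exp(log_initial)

print(f"estimated growth rate: {rate:.3f}")
print(f"estimated starting value: {initial:.1f}")
```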

Though much of the mathematical heavy lifting is done by computers, understanding what makes this possible is essential. Data scientists are tasked with knowing what questions to pose, and how to make computers answer them.

Computer science is in many ways a field of mathematics. Therefore, the need for mathematical data scientist skills is clear.

Data Scientist Soft Skills

Data science requires a diverse set of skills. It is an interdisciplinary field that draws on aspects of science, math, computer science, business and communication. Data scientists may benefit from a diverse skill-set that enables them to both crunch the numbers and effectively influence decisions.

Because data scientists focus on using data to influence and inform real-world decisions, they should be able to bridge the gap between numbers and actions. This requires skilled communication and an understanding of the business implications of their recommendations. Data scientists should be able to work as part of a larger team, providing data-driven suggestions in a compelling form. This requires skills that go beyond the data, statistics and tools that data scientists use.

Communication

Data scientists should be able to report technical findings such that they are comprehensible to non-technical colleagues, whether corner-office executives or associates in the marketing department.

Make your data-driven story not just comprehensible but compelling.

One important data scientist skill is communication. In order to be effective as a data scientist, people need to be able to understand the data. Data scientists act as a bridge between complex, uninterpretable raw data and actual people. Though cleaning, processing and analyzing data are essential steps in the data science pipeline, this work is useless without effective communication.

Effective communication requires a few key components.

Visualization allows a data scientist to craft a compelling story from data. Whether the story describes a problem, proposes a solution or raises a question; it is essential that the data be presented in a way that leads the audience to reach the intended conclusions. In order for this to happen, data scientists should describe the data and process in a shared language, avoiding jargon and unnecessary complexity.

Business Acumen

Business awareness could now be considered a prerequisite for effective data science. A data scientist should develop an understanding of the field they are working in before they are able to understand the meaning of data. Though some metrics, like profit and conversions, exist across industries, many key performance indicators (KPIs) are highly specialized. This data makes up the industry’s business intelligence, which is used to understand where the business is and the historical trends that have taken it there.

The unique goals, requirements and limitations of each industry define every step that a data scientist takes. Without understanding the underlying aspects of the industry, it could be impossible to find meaningful insight or make useful recommendations.

A data scientist may be most effective when they truly understand the business they are advising. Though data can provide unique insights, it may not capture the full picture. This requires a data scientist to be aware of the processes and realities at play in their industry. Though they may share a job title, the precise goals and tasks of a data scientist will vary greatly by industry. To be successful, a data scientist should understand the industry that they are working in.

Data-Driven Problem Solving

Data-driven problem solving allows data to inform the entire data science process. By using a structured approach to identify and frame problems, the decision making process could be simplified. In data science, the vast quantity of data and tools creates nearly endless avenues to pursue. Managing these decisions is an essential job for a data scientist. Data science both informs and is informed by the data-driven problem solving process.

A data scientist is likely to know how to productively approach a problem. This means identifying a situation’s salient features, figuring out how to frame a question that will yield the desired answer, deciding what approximations make sense, and consulting the right co-workers at the appropriate junctures of the analytic process. All of that in addition to knowing which data science methods to apply to the problem at hand.

A data scientist’s job is to understand how to take raw data and derive meaning from it. This requires more than just an understanding of advanced statistics and machine learning. They also need to integrate their understanding of the problem domain, available information and their goals when deciding how to proceed.

Data science problems and solutions are rarely obvious. There are many possible paths to explore, and it is easy to become overwhelmed with the options. A structured approach to data-driven problem solving allows a data scientist to track and manage progress and outcomes. Structured techniques such as Six Sigma are great tools to help data scientists and teams solve real-world data science problems.

Excerpted from Essential Data Science Skills

Tableau

Tableau is the data analytics tool that companies across the globe have embraced to communicate with data and develop a culture of data-driven decision making.

1 TABLEAU IS EASY

Data can be complicated. Tableau makes it easy. Tableau is a data visualization tool that takes data and presents it in a user-friendly format of charts and graphs.

There is no code writing required. You’ll easily master the end-to-end cycle of data analytics.

2 TABLEAU IS TREMENDOUSLY USEFUL

“Anyone who works in data should learn tools that help tell data stories with quality visual analytics.”

The smart data analysts, data scientists, and data engineers who quickly adopted Tableau and started to use it have gained a key competitive advantage in the recent data-related hiring frenzy.

Check out the visualizations developed by Tableau users.

TABLEAU DATA ANALYSTS ARE IN DEMAND

As more and more businesses discover the value of data, the demand for analysts is growing. One advantage of Tableau is that it is so visually pleasing and easy for busy executives — and even the tech-averse — to use and understand. Tableau presents complicated and sophisticated data in a simple visualization format. In other words, CEOs love it.

Think of Tableau as your secret weapon. Once you learn it, you can easily surface critical information to stakeholders in a visually compelling format.

“Tableau helps organizations leverage business intelligence to become more data-driven in their decision-making process.”

Get Started

The Tableau Public desktop app is a great way for you to get started.

Do the following to get started quickly:

- Download and install Tableau Public on your desktop

- Head over to Kaggle Datasets and find a CSV file of your liking and download it

- Open Tableau and read the downloaded CSV File

- Check out the tutorials here on how to use Tableau and build simple visualizations with it

Run Your Own Tableau Bootcamp

For students who are pursuing Analytics degree programs, a great way to conduct a cohort-based bootcamp is to adopt the following framework. The placement coordinator or student leaders can use it to run the competition. Industry leaders from your alumni group can be invited to judge the best dashboards developed.

- Students will organize themselves into a maximum of 10 teams.

- Each team will do the following:

- choose a team name.

- choose a team mascot/logo

- nominate a team manager who will handle team operations: setting up meetings, choosing the final Tableau layout and dashboard name, and acting as the team representative.

- lead analyst 1 who will develop the dashboard

- lead analyst 2 who will develop the dashboard

- business analyst who will research the dataset and consult with the team and discuss which dataset to choose for building the dashboard and the final business narrative

- visualization researcher who will survey the relevant public dashboards and discuss with the team on which visualizations to choose for the dashboard

If the team has more than 5 members, the additional members will be nominated as lead analyst 3, lead analyst 4 etc.

In the first meeting with the students, each team manager will present a slide describing their team, the dataset chosen and what they have in mind for the final dashboard.

We will use this session to answer any questions they may have (not technical questions). This session can also be for just the managers to meet with us and discuss their approach and choice of dataset and vision for the final dashboard.

A week later we meet with the students for the final presentations. Each team will be given 10 minutes to go over the dashboard. The manager will present for 5 to 6 minutes. We will ask questions for the last 4 minutes or so of each presentation.

We will use the following to guide our assessments:

Did the team select an interesting story to tell with the data as it related to the topic and audience?

A story should have a clear beginning, middle, and end. Questions are useful to guide the audience with answers as are takeaways that drive the narrative from introduction to conclusion.

Did the team select appropriate visualizations (chart types) to present the data?

Charts and graphs should clearly show the data without bias. The chart type should be familiar to the audience. Avoid overly-complex charts that look fancy but don't clearly show insights.

Did the team apply effective design principles to the charts to clearly present the data?

Charts should be devoid of extraneous non-data elements such as shadows, borders, and use of color for decoration.

Did the team apply effective design principles to the presentation?

Presentation decks should be designed to support the presentation rather than be the presentation. Text should be limited and the use of visuals should be emphasized. All text should be readable (at least 36-point font) for the audience.

Did the group present visualizations that worked together to tell a coherent story?

The visualizations used should progressively reveal the insights or trends. Each visualization should highlight a single and different takeaway.

Students will be asked to upload their presentations into the Tableau Public Gallery.

The following dashboards were created in 2021 by student teams from the Delhi University Statistics Department.

Insight Strategists

Team KRASS

Viz-Zards

Pentacle

Abraca-Data

Datatrons

Outliers

Data Demystifiers

Nirvachan

V Stay

SQL

SQL (Structured Query Language) is the main programming language used by database management systems.

We live in a data-driven world. Everything that we do online creates data. Every business transaction creates data: cash register sales, inventory changes, and upcoming shipments. According to Forbes, 90% of the data in the world was created in the last two years. All of this data provides invaluable information for businesses.

Over 97% of business organizations are investing in data.

SQL (Structured Query Language) is a programming language used to manage data in databases. SQL is the second-most popular programming language, and it is used by a majority of all developers. It has been the primary language of digital databases since the 1970s.

SQL is the most common method of accessing the immense amount of essential data stored in databases. Given how valuable this data has become to companies, people who know how to use SQL to access it have a considerable advantage in the job market.

Why learn SQL?

SQL as a job skill is relevant in every industry and one of the foundations of a career in data science: a hot career path Harvard named “the most promising career of the 21st century.” Learning SQL is recommended for anyone who wants to work in either coding or data. Some job positions that require SQL training are SQL Developer, Software Engineer, Data Scientist, Data Analyst, Database Administrator, and Big Data Architect.

The primary uses for SQL include:

- Numerous types of databases, including Microsoft SQL, MySQL, and PostgreSQL. These databases support innumerable companies of various sizes: Microsoft SQL alone is used by over 200,000 companies worldwide.

- SQL can also be found in other types of technology, including iPhones and Androids. For example, SQLite is used on every smartphone, in every browser, on Skype calls, music streaming services, and some televisions.

- Unlike many other languages, SQL is also used by non-programmers, such as marketers or finance workers querying for data.

With such widespread usage, developers who learn SQL will remain in demand for decades.

There are several reasons why SQL makes it so much easier for workers to learn other programming languages, including:

- SQL’s structure offers a straightforward framework for data analysis

- SQL is flexible and can be optimized by developers to run any query

- SQL language can be adapted and extended with new calculations

- SQL Has a Secure Future

Get Started with SQL

SQL is how you interact with a database. When interacting with a database you connect to it using a client. This client is a software program that you run into which you can issue SQL commands.

Additionally you can also connect to databases via programming languages and issue SQL commands via programming language interfaces.

Head over to this article which showcases how you can use a popular SQL client, DBeaver to connect to a variety of databases such as PostgreSQL

With DBeaver you can also connect to a CSV file and query it as though it were a database table. Additionally, you can connect to SQLite databases, which are embedded databases. This simply means the entire database functionality is contained within a file.
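To make the idea of issuing SQL through a programming language concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `apps` table, its columns and its three rows are hypothetical and exist only for this example; the database lives in memory, so nothing is written to disk.

```python
import sqlite3

# An in-memory SQLite database; pass a file path instead to persist it.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Hypothetical table and rows, invented for illustration.
cur.execute("CREATE TABLE apps (name TEXT, category TEXT, rating REAL)")
cur.executemany(
    "INSERT INTO apps VALUES (?, ?, ?)",
    [
        ("NoteTaker", "Productivity", 4.5),
        ("PuzzleFun", "Gaming", 4.1),
        ("TaskList", "Productivity", 3.9),
    ],
)
conn.commit()

# Count apps and average their ratings per category.
cur.execute(
    "SELECT category, COUNT(*), ROUND(AVG(rating), 2) "
    "FROM apps GROUP BY category ORDER BY category"
)
rows = cur.fetchall()
for row in rows:
    print(row)

conn.close()
```

The `?` placeholders let the driver pass values safely instead of building SQL strings by hand.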

Business Case Study with SQL

The Android App Store draws millions of users everyday to download apps for their smart phones. Apps belong to various categories like Entertainment, Productivity, Children, Gaming.

Apps have various ratings and reviews.

Imagine being able to analyze this information and draw insights that can help you learn more about the dynamics of this vibrant marketplace.

The following three-part series takes you into this dataset and shows you how you can use SQL to answer interesting business questions.

Android Marketplace Analysis - Part 1

Android Marketplace Analysis - Part 2

Android Marketplace Analysis - Part 3

Python is the #1 programming language for Data Science and Machine Learning (DSML) professionals.

Learning and investing your time in Python is necessary if you want to become a DSML professional. The ecosystem around Python is very extensive and the community is very passionate and helpful to new users.

The video below walks through a report released by JetBrains in which they surveyed the Big Data industry. As you can see, Python continues to be the #1 choice across the industry.

Now that you have decided to learn Python, you will need to learn how to:

- Run and test your Python code quickly

- Visualize data

- Run models and see results

- Develop Python scripts

- Develop Python web applications

- Develop software with Python

- and many more

To be able to do a variety of tasks you can learn how to use Jupyter for quick prototyping and research. And you will need to learn how to use an Integrated Development Environment (IDE) for more of the Software Development aspects.

PyCharm

JetBrains makes a very cool Python IDE called PyCharm.

Check it out. The Community edition is free.

VS Code

Another popular IDE is VS Code.

VS Code has certainly captured the imagination of DSML professionals. VS Code is free and is developed by Microsoft.

VS Code isn't used only for Python programming. You can code in many programming languages with VS Code. With a very large marketplace of plugins and extensions, VS Code will give you powerful capabilities as a DSML professional.

Python is a versatile language and is used for a variety of Software Engineering tasks in addition to being the #1 choice in DSML.

Style Guide

As you start writing code you will deal with basic challenges such as how to name variables and functions and how to write comments. You will wonder how to write clean and readable code. Style guides provide just that: guidance on how to write clean and readable code. They also create a form of standardization that improves knowledge sharing amongst peers and across the industry.

The Python foundation has published a style guide called PEP 8. You should read PEP 8 after you have read the Google Python style guide.

The one from Google is an easier read.

Remember these style guides are not a one-time read. You have to keep coming back to them and rereading them.

If you read it only when you are trying to solve the problem of naming variables you will not find an immediate answer. These style guides need to be read at leisure and with a certain regularity. Only during such readings will you "absorb" the suggestions and conventions so that they become part of your natural coding style.

Only then will the style guides work for you.
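As a small taste of what these guides recommend, the sketch below follows the PEP 8 naming conventions for constants, classes, functions and variables. The names themselves (`MAX_LAUNCH_ATTEMPTS`, `MissionReport`, `summary_line`) are hypothetical and chosen only for illustration.

```python
# Constants: UPPER_CASE_WITH_UNDERSCORES
MAX_LAUNCH_ATTEMPTS = 3


# Classes: CapWords
class MissionReport:
    """Holds the name of a mission and formats a short summary."""

    def __init__(self, mission_name):
        # Variables and attributes: lowercase_with_underscores
        self.mission_name = mission_name

    # Functions and methods: lowercase_with_underscores
    def summary_line(self):
        return f"Mission: {self.mission_name}"


report = MissionReport("PSLV-C53")
print(report.summary_line())
```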

Kaggle is a great platform that combines access to a variety of building blocks that make up an end-to-end Data Science and Machine Learning (DSML) workflow.

Datasets

On Kaggle a large collection of datasets are available. These datasets are of different kinds. Tabular data, Images and Text datasets are available for you to analyze and create models from. These Datasets are released by companies and also by data enthusiasts.

Competitions

Competitions are how Kaggle first gained huge popularity. There are many types of competitions. The ones that have a cash prize are sponsored by large companies who wish to learn from the best Data professionals in the world.

Imagine a company wants to solve a DSML problem. Kaggle gives them a chance to present the problem to the world and have the best DSML professionals and enthusiasts compete with each other for the final prize.

Kaggle has created a very solid talent network for Data professionals. The winners of many of these competitions go on to get top jobs in the DSML industry. Many of the winners are already top professionals and academics in DSML and seeing how they win a competition is the best kind of practical education in DSML world.

Notebooks

Notebooks are the building blocks of all DSML workflows. Students, professors and professionals all use Notebooks during research and also in production.

Notebooks are a very different approach to developing technical solutions. For DSML professionals this is a huge advantage.

In a Notebook you can write code and see the output right there. And you can break up a long problem statement into small sub parts. And for each sub part you can write code and see the results.

With this approach you can build up from zero to the final solution. You can visualize data and results. You can extract output in the form of reports.

And you can also finally put this Notebook into production as the final DSML product.

On Kaggle you get to see how the top DSML professionals have solved problems. Their Notebooks are there for you to see and learn from. This is like working with the top DSML people but from the comfort of your home and country.

Discussions from the top professionals in the industry

Discussions on Kaggle are across many topics. These discussions are a gold mine. In these forums you get to learn from DSML professionals asking very important and critical questions.

These questions are based on data quality, feature engineering, model selection, performance testing of model results and many other critical aspects of DSML work.

The responses to these questions reveal the best practices and alternative approaches that DSML people apply in solving challenging problems. You can very well imagine that having access to such discussions can increase your understanding of DSML by leaps and bounds.

These are evolving discussions, so you should keep coming back to the forums to see how the discussion has changed over time. You are seeing the industry change in real time. This is what it means to be at the cutting edge.

Courses

Kaggle Courses are short introductions into all aspects of DSML. You can find introduction to programming, data analysis, machine learning and deep neural network related courses.

Python is used successfully in thousands of real-world business applications around the world, including many large and mission critical systems.

Here are some quotes from happy Python users

"Python is fast enough for our site and allows us to produce maintainable features in record times, with a minimum of developers," said Cuong Do, Software Architect, YouTube.com.

"Python plays a key role in our production pipeline. Without it a project the size of Star Wars: Episode II would have been very difficult to pull off. From crowd rendering to batch processing to compositing, Python binds all things together," said Tommy Burnette, Senior Technical Director, Industrial Light & Magic.

"Python has been an important part of Google since the beginning, and remains so as the system grows and evolves. Today dozens of Google engineers use Python, and we're looking for more people with skills in this language," said Peter Norvig, director of search quality at Google, Inc.

Python usage survey and reports

The PSF (Python Software Foundation) compiled a brochure many years ago to showcase the advantages of using Python. While this brochure is useful as a quick read, there is a lot more happening with Python today.

A more recent version lists a large number of Python usage trends in the industry, such as:

- Internet of Things

- Machine Learning

- Startups

- Web Development

- Fintech

- Data Science

- Data Engineering

The JetBrains survey on Python is a great read. Check the video below to see the highlights of this survey.

Learn Python

Learning how to code can take on a variety of approaches. You can learn by reading books or blogs, or by watching YouTube videos. But the most important thing is to practice.

In the section below you will come across a few concepts in Python which can be a great place to start.

Create your first Notebook

We will use Kaggle to create a Notebook where all of our code will be executed.

Data Types

# string

name = "Aryabhatta"

occupation = "Mathematician"

# an integer

age = 23

# float

weight = 64.81

# boolean

is_a_mathematician = True

can_ride_elephant = False

# list

isro_missions = ["GSLV-F10", "PSLV-C51", "PSLV-C50", "PSLV-C49"]

# dictionary

isro_mission_dates = {

'PSLV-C53' : 'Jun 30 2022',

'PSLV-C52' : 'Feb 14 2022',

'GSLV-F10' : 'Aug 12 2021',

'PSLV-C51' : 'Feb 28 2021',

'PSLV-C50' : 'Dec 17 2020'

}

just_the_isro_mission_names = isro_mission_dates.keys() # a view of the mission names (the dictionary keys)

just_the_isro_mission_dates = isro_mission_dates.values() # a view of the mission dates (the dictionary values)

# tuple

# can organize the basic variable types in a comma delimited manner

# and retrieve them in that order

pslv_isro_launches = ('PSLV-C53', 'PSLV-F10')

pslv_c, pslv_f = pslv_isro_launches

# objects (classes)

# use objects when you have a lot of things with similar data structure and actions

class SatelliteLaunch(object):

    # __init__ is called automatically when creating an object

    def __init__(self, name=None):

        print(f'Creating new launcher for {name}')

        self.name = name

    def set_name(self, name):

        self.name = name

launcher = SatelliteLaunch("Chandrayan")

launcher.set_name("Chandrayan 2")

launcher.payload = "Pragyan Rover" # dynamically set object property/variable

print(f'{launcher.name} is carrying the payload of {launcher.payload}')

print("This variable is :", type(launcher))

print("Hello World!")

print("Python is the #1 programming language in the world")

temperature = 23

print(f"It is {temperature} degree Celsius in Bangalore today")
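The dictionary and tuple examples above can be extended slightly. The sketch below reuses a few of the mission dates from this section and shows three things worth knowing early: `keys()` returns a view rather than a list, `items()` yields tuples that can be unpacked in a loop, and `get()` avoids an error for a missing key. The key `"PSLV-C99"` is made up purely to demonstrate the missing-key case.

```python
isro_mission_dates = {
    "PSLV-C53": "Jun 30 2022",
    "PSLV-C52": "Feb 14 2022",
    "GSLV-F10": "Aug 12 2021",
}

# keys() and values() return live views; wrap them in list() for a real list.
mission_names = list(isro_mission_dates.keys())
print(mission_names)

# items() yields (key, value) tuples, unpacked here into two loop variables.
for mission, launch_date in isro_mission_dates.items():
    print(f"{mission} launched on {launch_date}")

# get() returns a default instead of raising KeyError for a missing key.
# "PSLV-C99" is a made-up name used only to show the missing-key case.
unknown_date = isro_mission_dates.get("PSLV-C99", "not in this dictionary")
print(unknown_date)
```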

Control Flows

isro_location = 'blr'

if isro_location == 'blr':

    print("You got the location right")

else:

    print("You got the location wrong")
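When there are more than two cases, `elif` adds further branches between `if` and `else`. The sketch below wraps the check in a function; the function name and the location codes other than `'blr'` are assumptions made for this example.

```python
def describe_location(code):
    """Return a readable name for a short location code."""
    if code == "blr":
        return "Bangalore"
    elif code == "hyd":  # hypothetical extra code, for illustration only
        return "Hyderabad"
    else:
        return "unknown location"


for code in ["blr", "hyd", "xyz"]:
    print(code, "->", describe_location(code))
```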

List Comprehensions

# A list comprehension is a Pythonic way of expressing a for-loop that builds a list.

result = []

for i in range(10):

    if i % 2 == 0:

        result.append(i)

print(result)

# this is a list comprehension. The above is not.

[i for i in range(10) if i % 2 == 0]

[i**2 for i in range(10)]
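To see that the loop and the comprehension above really do the same job, the sketch below builds the list both ways and compares them. It also shows a dictionary comprehension, which follows the same pattern; the variable names are chosen for this example.

```python
# Build the even numbers with an explicit loop.
evens_loop = []
for i in range(10):
    if i % 2 == 0:
        evens_loop.append(i)

# Build the same list with a list comprehension.
evens = [i for i in range(10) if i % 2 == 0]
print(evens_loop == evens)

# The same syntax with braces and key: value pairs gives a dictionary.
square_by_number = {i: i**2 for i in range(5)}
print(square_by_number)
```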

Continue Learning

FreeCodeCamp has great tutorials and you can learn Python from one of their YouTube videos.

Introduction to Data Analysis with Pandas

pandas is a fast, powerful, flexible and easy to use open source data analysis and manipulation tool, built on top of the Python programming language.

History of development

In 2008, pandas development began at AQR Capital Management. By the end of 2009 it had been open sourced, and is actively supported today by a community of like-minded individuals around the world who contribute their valuable time and energy to help make open source pandas possible.

Library Highlights

- A fast and efficient DataFrame object for data manipulation with integrated indexing;

- Tools for reading and writing data between in-memory data structures and different formats:

  - CSV and text files

  - Microsoft Excel

  - SQL databases

  - The fast HDF5 format

- Intelligent data alignment and integrated handling of missing data: gain automatic label-based alignment in computations and easily manipulate messy data into an orderly form;

- Flexible reshaping and pivoting of data sets;

- Intelligent label-based slicing, fancy indexing, and subsetting of large data sets;

- Columns can be inserted and deleted from data structures for size mutability;

- Aggregating or transforming data with a powerful group by engine allowing split-apply-combine operations on data sets;

- High performance merging and joining of data sets;

- Hierarchical axis indexing provides an intuitive way of working with high-dimensional data in a lower-dimensional data structure;

- Time series functionality: date range generation and frequency conversion, moving window statistics, date shifting and lagging. Even create domain-specific time offsets and join time series without losing data;

- Highly optimized for performance, with critical code paths written in Cython or C.

- Python with pandas is in use in a wide variety of academic and commercial domains, including Finance, Neuroscience, Economics, Statistics, Advertising, Web Analytics, and more.
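
Two of the highlights above, reading CSV data and automatic label-based alignment, can be illustrated with a minimal sketch. The CSV text, the city names and the variable names are hypothetical and chosen only for this example.

```python
import io

import pandas as pd

# Hypothetical CSV content, held in memory instead of a file on disk.
csv_text = "city,sales\nBengaluru,10\nMysuru,7\nMangaluru,4\n"

# read_csv accepts a file path or any file-like object.
sales = pd.read_csv(io.StringIO(csv_text), index_col="city")

# A second Series whose labels only partly overlap with the index above.
returns = pd.Series({"Mysuru": 2, "Bengaluru": 1, "Hubballi": 3})

# Subtraction aligns on labels, not on position; a label missing
# from either side produces NaN in the result.
net = sales["sales"] - returns
print(net)
```

Bengaluru and Mysuru appear on both sides and get a numeric result, while Mangaluru and Hubballi each appear on only one side and come out as NaN.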

Mission

pandas aims to be the fundamental high-level building block for doing practical, real world data analysis in Python. Additionally, it has the broader goal of becoming the most powerful and flexible open source data analysis / manipulation tool available in any language.

Vision

A world where data analytics and manipulation software is:

- Accessible to everyone

- Free for users to use and modify

- Flexible

- Powerful

- Easy to use

- Fast

Values

It is at the core of pandas to be respectful and welcoming to everybody: users, contributors and the broader community, regardless of level of experience, gender, gender identity and expression, sexual orientation, disability, personal appearance, body size, race, ethnicity, age, religion, or nationality.

Quick Pandas Tour

This is a short introduction to pandas, geared mainly for new users. It is taken from the "10 minutes to pandas" guide.

import numpy as np

import pandas as pd

Object creation

Creating a Series by passing a list of values, letting pandas create a default integer index:

s = pd.Series([1, 3, 5, np.nan, 6, 8])

s

Out[4]:

0 1.0

1 3.0

2 5.0

3 NaN

4 6.0

5 8.0

dtype: float64

Creating a DataFrame by passing a NumPy array, with a datetime index and labeled columns:

dates = pd.date_range("20130101", periods=6)

dates

Out[6]:

DatetimeIndex(['2013-01-01', '2013-01-02', '2013-01-03', '2013-01-04',

'2013-01-05', '2013-01-06'],

dtype='datetime64[ns]', freq='D')

df = pd.DataFrame(np.random.randn(6, 4), index=dates, columns=list("ABCD"))

df

Out[8]:

A B C D

2013-01-01 0.469112 -0.282863 -1.509059 -1.135632

2013-01-02 1.212112 -0.173215 0.119209 -1.044236

2013-01-03 -0.861849 -2.104569 -0.494929 1.071804

2013-01-04 0.721555 -0.706771 -1.039575 0.271860

2013-01-05 -0.424972 0.567020 0.276232 -1.087401

2013-01-06 -0.673690 0.113648 -1.478427 0.524988

Creating a DataFrame by passing a dictionary of objects that can be converted into a series-like structure:

df2 = pd.DataFrame(

{

"A": 1.0,

"B": pd.Timestamp("20130102"),

"C": pd.Series(1, index=list(range(4)), dtype="float32"),

"D": np.array([3] * 4, dtype="int32"),

"E": pd.Categorical(["test", "train", "test", "train"]),

"F": "foo",

}

)

df2

Out[10]:

A B C D E F

0 1.0 2013-01-02 1.0 3 test foo

1 1.0 2013-01-02 1.0 3 train foo

2 1.0 2013-01-02 1.0 3 test foo

3 1.0 2013-01-02 1.0 3 train foo

The columns of the resulting DataFrame have different dtypes:

df2.dtypes

Out[11]:

A float64

B datetime64[ns]

C float32

D int32

E category

F object

dtype: object

Viewing data

# Here is how to view the top and bottom rows of the frame:

df.head()

Out[13]:

A B C D

2013-01-01 0.469112 -0.282863 -1.509059 -1.135632

2013-01-02 1.212112 -0.173215 0.119209 -1.044236

2013-01-03 -0.861849 -2.104569 -0.494929 1.071804

2013-01-04 0.721555 -0.706771 -1.039575 0.271860

2013-01-05 -0.424972 0.567020 0.276232 -1.087401

df.tail(3)

Out[14]:

A B C D

2013-01-04 0.721555 -0.706771 -1.039575 0.271860

2013-01-05 -0.424972 0.567020 0.276232 -1.087401

2013-01-06 -0.673690 0.113648 -1.478427 0.524988

Display the index, columns:

df.index

Out[15]:

DatetimeIndex(['2013-01-01', '2013-01-02', '2013-01-03', '2013-01-04',

'2013-01-05', '2013-01-06'],

dtype='datetime64[ns]', freq='D')

df.columns

Out[16]: Index(['A', 'B', 'C', 'D'], dtype='object')

# describe() shows a quick statistic summary of your data:

df.describe()

Out[19]:

A B C D

count 6.000000 6.000000 6.000000 6.000000

mean 0.073711 -0.431125 -0.687758 -0.233103

std 0.843157 0.922818 0.779887 0.973118

min -0.861849 -2.104569 -1.509059 -1.135632

25% -0.611510 -0.600794 -1.368714 -1.076610

50% 0.022070 -0.228039 -0.767252 -0.386188

75% 0.658444 0.041933 -0.034326 0.461706

max 1.212112 0.567020 0.276232 1.071804

# Transposing your data:

df.T

Out[20]:

2013-01-01 2013-01-02 2013-01-03 2013-01-04 2013-01-05 2013-01-06

A 0.469112 1.212112 -0.861849 0.721555 -0.424972 -0.673690

B -0.282863 -0.173215 -2.104569 -0.706771 0.567020 0.113648

C -1.509059 0.119209 -0.494929 -1.039575 0.276232 -1.478427

D -1.135632 -1.044236 1.071804 0.271860 -1.087401 0.524988

Sorting by an axis:

df.sort_index(axis=1, ascending=False)

Out[21]:

D C B A

2013-01-01 -1.135632 -1.509059 -0.282863 0.469112

2013-01-02 -1.044236 0.119209 -0.173215 1.212112

2013-01-03 1.071804 -0.494929 -2.104569 -0.861849

2013-01-04 0.271860 -1.039575 -0.706771 0.721555

2013-01-05 -1.087401 0.276232 0.567020 -0.424972

2013-01-06 0.524988 -1.478427 0.113648 -0.673690

Sorting by values:

df.sort_values(by="B")

Out[22]:

A B C D

2013-01-03 -0.861849 -2.104569 -0.494929 1.071804

2013-01-04 0.721555 -0.706771 -1.039575 0.271860

2013-01-01 0.469112 -0.282863 -1.509059 -1.135632

2013-01-02 1.212112 -0.173215 0.119209 -1.044236

2013-01-06 -0.673690 0.113648 -1.478427 0.524988

2013-01-05 -0.424972 0.567020 0.276232 -1.087401

Selection

While standard Python / NumPy expressions for selecting and setting are intuitive and come in handy for interactive work, for production code we recommend the optimized pandas data access methods: .at, .iat, .loc and .iloc.

Selecting a single column, which yields a Series, equivalent to df.A:

df["A"]

Out[23]:

2013-01-01 0.469112

2013-01-02 1.212112

2013-01-03 -0.861849

2013-01-04 0.721555

2013-01-05 -0.424972

2013-01-06 -0.673690

Freq: D, Name: A, dtype: float64

Selecting via [], which slices the rows:

df[0:3]

Out[24]:

A B C D

2013-01-01 0.469112 -0.282863 -1.509059 -1.135632

2013-01-02 1.212112 -0.173215 0.119209 -1.044236

2013-01-03 -0.861849 -2.104569 -0.494929 1.071804

df["20130102":"20130104"]

Out[25]:

A B C D

2013-01-02 1.212112 -0.173215 0.119209 -1.044236

2013-01-03 -0.861849 -2.104569 -0.494929 1.071804

2013-01-04 0.721555 -0.706771 -1.039575 0.271860

# Selection by label

df.loc[dates[0]]

Out[26]:

A 0.469112

B -0.282863

C -1.509059

D -1.135632

Name: 2013-01-01 00:00:00, dtype: float64

Selecting on a multi-axis by label:

df.loc[:, ["A", "B"]]

Out[27]:

A B

2013-01-01 0.469112 -0.282863

2013-01-02 1.212112 -0.173215

2013-01-03 -0.861849 -2.104569

2013-01-04 0.721555 -0.706771

2013-01-05 -0.424972 0.567020

2013-01-06 -0.673690 0.113648

Showing label slicing, both endpoints are included:

df.loc["20130102":"20130104", ["A", "B"]]

Out[28]:

A B

2013-01-02 1.212112 -0.173215

2013-01-03 -0.861849 -2.104569

2013-01-04 0.721555 -0.706771

# Reduction in the dimensions of the returned object:

df.loc["20130102", ["A", "B"]]

Out[29]:

A 1.212112

B -0.173215

Name: 2013-01-02 00:00:00, dtype: float64

For getting a scalar value:

df.loc[dates[0], "A"]

Out[30]: 0.4691122999071863

# For getting fast access to a scalar (equivalent to the prior method):

df.at[dates[0], "A"]

Out[31]: 0.4691122999071863

# Select via the position of the passed integers:

df.iloc[3]

Out[32]:

A 0.721555

B -0.706771

C -1.039575

D 0.271860

Name: 2013-01-04 00:00:00, dtype: float64

# By integer slices, acting similar to NumPy/Python:

df.iloc[3:5, 0:2]

Out[33]:

A B

2013-01-04 0.721555 -0.706771

2013-01-05 -0.424972 0.567020

# By lists of integer position locations, similar to the NumPy/Python style:

df.iloc[[1, 2, 4], [0, 2]]

Out[34]:

A C

2013-01-02 1.212112 0.119209

2013-01-03 -0.861849 -0.494929

2013-01-05 -0.424972 0.276232

# For slicing rows explicitly:

df.iloc[1:3, :]

Out[35]:

A B C D

2013-01-02 1.212112 -0.173215 0.119209 -1.044236

2013-01-03 -0.861849 -2.104569 -0.494929 1.071804

# For slicing columns explicitly:

df.iloc[:, 1:3]

Out[36]:

B C

2013-01-01 -0.282863 -1.509059

2013-01-02 -0.173215 0.119209

2013-01-03 -2.104569 -0.494929

2013-01-04 -0.706771 -1.039575

2013-01-05 0.567020 0.276232

2013-01-06 0.113648 -1.478427

# For getting a value explicitly:

df.iloc[1, 1]

Out[37]: -0.17321464905330858

# For getting fast access to a scalar (equivalent to the prior method):

df.iat[1, 1]

Out[38]: -0.17321464905330858

# Boolean indexing

Using a single column’s values to select data:

df[df["A"] > 0]

Out[39]:

A B C D

2013-01-01 0.469112 -0.282863 -1.509059 -1.135632

2013-01-02 1.212112 -0.173215 0.119209 -1.044236

2013-01-04 0.721555 -0.706771 -1.039575 0.271860

# Selecting values from a DataFrame where a boolean condition is met:

df[df > 0]

Out[40]:

A B C D

2013-01-01 0.469112 NaN NaN NaN

2013-01-02 1.212112 NaN 0.119209 NaN

2013-01-03 NaN NaN NaN 1.071804

2013-01-04 0.721555 NaN NaN 0.271860

2013-01-05 NaN 0.567020 0.276232 NaN

2013-01-06 NaN 0.113648 NaN 0.524988

# Using the isin() method for filtering:

df2 = df.copy()

df2["E"] = ["one", "one", "two", "three", "four", "three"]

df2

Out[43]:

A B C D E

2013-01-01 0.469112 -0.282863 -1.509059 -1.135632 one

2013-01-02 1.212112 -0.173215 0.119209 -1.044236 one

2013-01-03 -0.861849 -2.104569 -0.494929 1.071804 two

2013-01-04 0.721555 -0.706771 -1.039575 0.271860 three

2013-01-05 -0.424972 0.567020 0.276232 -1.087401 four

2013-01-06 -0.673690 0.113648 -1.478427 0.524988 three

df2[df2["E"].isin(["two", "four"])]

Out[44]:

A B C D E

2013-01-03 -0.861849 -2.104569 -0.494929 1.071804 two

2013-01-05 -0.424972 0.567020 0.276232 -1.087401 four
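
Boolean masks can also be combined. The following is a minimal sketch on a small hypothetical frame (the name frame and its values are made up for illustration and are unrelated to the random data above).

```python
import pandas as pd

# A small hypothetical frame with one numeric and one text column.
frame = pd.DataFrame(
    {"A": [0.5, 1.2, -0.9, 0.7], "E": ["one", "one", "two", "three"]}
)

# Combine masks with & (and), | (or) and ~ (not). Each comparison
# needs its own parentheses because & and | bind tighter than > or ==.
positive_ones = frame[(frame["A"] > 0) & (frame["E"] == "one")]

# ~ inverts a mask: keep the rows whose E is NOT in the given list.
not_two_or_three = frame[~frame["E"].isin(["two", "three"])]

print(positive_ones)
print(not_two_or_three)
```

Python's plain and / or keywords do not work element-wise on a Series, which is why the bitwise operators are used here.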

Setting

Setting a new column automatically aligns the data by the indexes:

s1 = pd.Series([1, 2, 3, 4, 5, 6], index=pd.date_range("20130102", periods=6))

s1

Out[46]:

2013-01-02 1

2013-01-03 2

2013-01-04 3

2013-01-05 4

2013-01-06 5

2013-01-07 6

Freq: D, dtype: int64

df["F"] = s1

# Setting values by label:

df.at[dates[0], "A"] = 0

# Setting values by position:

df.iat[0, 1] = 0

# Setting by assigning with a NumPy array:

df.loc[:, "D"] = np.array([5] * len(df))

# The result of the prior setting operations:

df

Out[51]:

A B C D F

2013-01-01 0.000000 0.000000 -1.509059 5 NaN

2013-01-02 1.212112 -0.173215 0.119209 5 1.0

2013-01-03 -0.861849 -2.104569 -0.494929 5 2.0

2013-01-04 0.721555 -0.706771 -1.039575 5 3.0

2013-01-05 -0.424972 0.567020 0.276232 5 4.0

2013-01-06 -0.673690 0.113648 -1.478427 5 5.0

# A where operation with setting:

df2 = df.copy()

df2[df2 > 0] = -df2

df2

Out[54]:

A B C D F

2013-01-01 0.000000 0.000000 -1.509059 -5 NaN

2013-01-02 -1.212112 -0.173215 -0.119209 -5 -1.0

2013-01-03 -0.861849 -2.104569 -0.494929 -5 -2.0

2013-01-04 -0.721555 -0.706771 -1.039575 -5 -3.0

2013-01-05 -0.424972 -0.567020 -0.276232 -5 -4.0

2013-01-06 -0.673690 -0.113648 -1.478427 -5 -5.0

Missing data

pandas primarily uses the value np.nan to represent missing data. It is by default not included in computations.

# Reindexing allows you to change/add/delete the index on a specified axis. This returns a copy of the data:

df1 = df.reindex(index=dates[0:4], columns=list(df.columns) + ["E"])

df1.loc[dates[0] : dates[1], "E"] = 1

df1

Out[57]:

A B C D F E

2013-01-01 0.000000 0.000000 -1.509059 5 NaN 1.0

2013-01-02 1.212112 -0.173215 0.119209 5 1.0 1.0

2013-01-03 -0.861849 -2.104569 -0.494929 5 2.0 NaN

2013-01-04 0.721555 -0.706771 -1.039575 5 3.0 NaN

# To drop any rows that have missing data:

df1.dropna(how="any")

Out[58]:

A B C D F E

2013-01-02 1.212112 -0.173215 0.119209 5 1.0 1.0

Filling missing data:

df1.fillna(value=5)

Out[59]:

A B C D F E

2013-01-01 0.000000 0.000000 -1.509059 5 5.0 1.0

2013-01-02 1.212112 -0.173215 0.119209 5 1.0 1.0

2013-01-03 -0.861849 -2.104569 -0.494929 5 2.0 5.0

2013-01-04 0.721555 -0.706771 -1.039575 5 3.0 5.0

# To get the boolean mask where values are nan:

pd.isna(df1)

Out[60]:

A B C D F E

2013-01-01 False False False False True False

2013-01-02 False False False False False False

2013-01-03 False False False False False True

2013-01-04 False False False False False True

Stats

Operations in general exclude missing data.

# Performing a descriptive statistic:

df.mean()

Out[61]:

A -0.004474

B -0.383981

C -0.687758

D 5.000000

F 3.000000

dtype: float64

# Same operation on the other axis:

df.mean(1)

Out[62]:

2013-01-01 0.872735

2013-01-02 1.431621

2013-01-03 0.707731

2013-01-04 1.395042

2013-01-05 1.883656

2013-01-06 1.592306

Freq: D, dtype: float64
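
To make the handling of missing data in statistics concrete, here is a minimal sketch on a hypothetical three-element Series. The skipna parameter is part of the pandas reduction methods; the data is invented for illustration.

```python
import numpy as np
import pandas as pd

values = pd.Series([1.0, np.nan, 3.0])

# By default the missing entry is skipped: (1 + 3) / 2.
print(values.mean())              # 2.0

# With skipna=False the missing entry propagates to the result.
print(values.mean(skipna=False))  # nan

# count() reports only the non-missing entries.
print(values.count())             # 2
```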

Apply

Applying functions to the data:

df.apply(np.cumsum)

Out[66]:

A B C D F

2013-01-01 0.000000 0.000000 -1.509059 5 NaN

2013-01-02 1.212112 -0.173215 -1.389850 10 1.0

2013-01-03 0.350263 -2.277784 -1.884779 15 3.0

2013-01-04 1.071818 -2.984555 -2.924354 20 6.0

2013-01-05 0.646846 -2.417535 -2.648122 25 10.0

2013-01-06 -0.026844 -2.303886 -4.126549 30 15.0

df.apply(lambda x: x.max() - x.min())

Out[67]:

A 2.073961

B 2.671590

C 1.785291

D 0.000000

F 4.000000

dtype: float64

# Histogramming

s = pd.Series(np.random.randint(0, 7, size=10))

s

Out[69]:

0 4

1 2

2 1

3 2

4 6

5 4

6 4

7 6

8 4

9 4

dtype: int64

s.value_counts()

Out[70]:

4 5

2 2

6 2

1 1

dtype: int64

String Methods

Series is equipped with a set of string processing methods in the str attribute that make it easy to operate on each element of the array, as in the code snippet below. Note that pattern-matching in str generally uses regular expressions by default (and in some cases always uses them).

s = pd.Series(["A", "B", "C", "Aaba", "Baca", np.nan, "CABA", "dog", "cat"])

s.str.lower()

Out[72]:

0 a

1 b

2 c

3 aaba

4 baca

5 NaN

6 caba

7 dog

8 cat

dtype: object
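
The note about regular expressions matters in practice. As a minimal sketch, assuming a hypothetical Series called names, the same pattern gives different answers depending on whether it is treated as a regular expression or as literal text:

```python
import pandas as pd

names = pd.Series(["a.b", "acb", "a+b"])

# By default str.contains treats the pattern as a regular expression,
# so "." matches any single character.
as_regex = names.str.contains("a.b")

# regex=False switches to a literal substring search.
as_literal = names.str.contains("a.b", regex=False)

print(as_regex.tolist())    # [True, True, True]
print(as_literal.tolist())  # [True, False, False]
```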

Concat

Concatenating pandas objects together with concat():

df = pd.DataFrame(np.random.randn(10, 4))

df

Out[74]:

0 1 2 3

0 -0.548702 1.467327 -1.015962 -0.483075

1 1.637550 -1.217659 -0.291519 -1.745505

2 -0.263952 0.991460 -0.919069 0.266046

3 -0.709661 1.669052 1.037882 -1.705775

4 -0.919854 -0.042379 1.247642 -0.009920

5 0.290213 0.495767 0.362949 1.548106

6 -1.131345 -0.089329 0.337863 -0.945867

7 -0.932132 1.956030 0.017587 -0.016692

8 -0.575247 0.254161 -1.143704 0.215897

9 1.193555 -0.077118 -0.408530 -0.862495

# break it into pieces

pieces = [df[:3], df[3:7], df[7:]]

pd.concat(pieces)

Out[76]:

0 1 2 3

0 -0.548702 1.467327 -1.015962 -0.483075

1 1.637550 -1.217659 -0.291519 -1.745505

2 -0.263952 0.991460 -0.919069 0.266046

3 -0.709661 1.669052 1.037882 -1.705775

4 -0.919854 -0.042379 1.247642 -0.009920

5 0.290213 0.495767 0.362949 1.548106

6 -1.131345 -0.089329 0.337863 -0.945867

7 -0.932132 1.956030 0.017587 -0.016692

8 -0.575247 0.254161 -1.143704 0.215897

9 1.193555 -0.077118 -0.408530 -0.862495
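
In the example above the pieces came from a single frame, so the index labels stay unique after concatenation. When the pieces are independent frames, labels can repeat. A minimal sketch with two hypothetical frames (first and second):

```python
import pandas as pd

first = pd.DataFrame({"x": [1, 2]})
second = pd.DataFrame({"x": [3, 4]})

# Plain concat keeps each piece's own index, so labels repeat.
stacked = pd.concat([first, second])
print(stacked.index.tolist())     # [0, 1, 0, 1]

# ignore_index=True builds a fresh 0..n-1 index instead.
renumbered = pd.concat([first, second], ignore_index=True)
print(renumbered.index.tolist())  # [0, 1, 2, 3]
```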

Join

# SQL style merges.

left = pd.DataFrame({"key": ["foo", "foo"], "lval": [1, 2]})

right = pd.DataFrame({"key": ["foo", "foo"], "rval": [4, 5]})

left

Out[79]:

key lval

0 foo 1

1 foo 2

right

Out[80]:

key rval

0 foo 4

1 foo 5

pd.merge(left, right, on="key")

Out[81]:

key lval rval

0 foo 1 4

1 foo 1 5

2 foo 2 4

3 foo 2 5

# Another example that can be given is:

left = pd.DataFrame({"key": ["foo", "bar"], "lval": [1, 2]})

right = pd.DataFrame({"key": ["foo", "bar"], "rval": [4, 5]})

left

Out[84]:

key lval

0 foo 1

1 bar 2

right

Out[85]:

key rval

0 foo 4

1 bar 5

pd.merge(left, right, on="key")

Out[86]:

key lval rval

0 foo 1 4

1 bar 2 5
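
Both merges above use keys that exist on both sides. The sketch below, with hypothetical frames in which one key is missing from each side, shows how the how and indicator parameters of pd.merge change the result.

```python
import pandas as pd

left = pd.DataFrame({"key": ["foo", "bar"], "lval": [1, 2]})
right = pd.DataFrame({"key": ["foo", "baz"], "rval": [4, 5]})

# The default is an inner join: only keys present on both sides survive.
inner = pd.merge(left, right, on="key")

# how="outer" keeps every key; indicator=True adds a _merge column
# recording which side each row came from.
outer = pd.merge(left, right, on="key", how="outer", indicator=True)

print(inner)
print(outer)
```

The inner result contains only the "foo" row, while the outer result has one row per distinct key, with NaN where a side had no match.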

Grouping

By “group by” we are referring to a process involving one or more of the following steps:

-

Splitting the data into groups based on some criteria

-

Applying a function to each group independently

-

Combining the results into a data structure

df = pd.DataFrame(

{

"A": ["foo", "bar", "foo", "bar", "foo", "bar", "foo", "foo"],

"B": ["one", "one", "two", "three", "two", "two", "one", "three"],

"C": np.random.randn(8),

"D": np.random.randn(8),

}

)

df

Out[88]:

A B C D

0 foo one 1.346061 -1.577585

1 bar one 1.511763 0.396823

2 foo two 1.627081 -0.105381

3 bar three -0.990582 -0.532532

4 foo two -0.441652 1.453749

5 bar two 1.211526 1.208843

6 foo one 0.268520 -0.080952

7 foo three 0.024580 -0.264610

# Grouping and then applying the sum() function to the resulting groups:

df.groupby("A").sum()

Out[89]:

C D

A

bar 1.732707 1.073134

foo 2.824590 -0.574779

# Grouping by multiple columns forms a hierarchical index, and again we can apply the sum() function:

df.groupby(["A", "B"]).sum()

Out[90]:

C D

A B

bar one 1.511763 0.396823

three -0.990582 -0.532532

two 1.211526 1.208843

foo one 1.614581 -1.658537

three 0.024580 -0.264610

two 1.185429 1.348368
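
The split, apply and combine steps listed at the start of this section can be written out by hand. This is a minimal sketch on a small hypothetical frame, intended only to show what groupby("A").sum() does conceptually, not how pandas implements it.

```python
import pandas as pd

frame = pd.DataFrame(
    {"A": ["foo", "bar", "foo", "bar"], "C": [1.0, 2.0, 3.0, 4.0]}
)

# Split: iterating over a GroupBy yields (group label, sub-frame) pairs.
# Apply: sum column C inside each sub-frame.
partial_sums = {label: group["C"].sum() for label, group in frame.groupby("A")}

# Combine: collect the per-group results into one Series.
manual = pd.Series(partial_sums)

# The one-line groupby call produces the same numbers.
builtin = frame.groupby("A")["C"].sum()

print(manual.to_dict())   # {'bar': 6.0, 'foo': 4.0}
print(builtin.to_dict())  # {'bar': 6.0, 'foo': 4.0}
```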

Plotting

# We use the standard convention for referencing the matplotlib API:

import matplotlib.pyplot as plt

# The close() method is used to close a figure window:

plt.close("all")

ts = pd.Series(np.random.randn(1000), index=pd.date_range("1/1/2000", periods=1000))

ts = ts.cumsum()

ts.plot()

# If running under Jupyter Notebook, the plot will appear on plot(). Otherwise use matplotlib.pyplot.show to show it or matplotlib.pyplot.savefig to write it to a file.

plt.show();

# On a DataFrame, the plot() method is a convenience to plot all of the columns with labels:

df = pd.DataFrame(

np.random.randn(1000, 4), index=ts.index, columns=["A", "B", "C", "D"]

)

df = df.cumsum()

plt.figure()

df.plot()

plt.legend(loc='best');
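
Outside a notebook, a plot can be written to a file instead of being shown. The following is a minimal sketch, assuming the non-interactive "Agg" backend and a hypothetical output file name (ts.png) inside a temporary directory.

```python
import os
import tempfile

import matplotlib

matplotlib.use("Agg")  # non-interactive backend, no window needed

import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

ts = pd.Series(
    np.random.randn(100), index=pd.date_range("1/1/2000", periods=100)
).cumsum()

# Series.plot() returns a matplotlib Axes; its figure can be saved.
ax = ts.plot(title="Cumulative sum")

# Hypothetical output location inside a temporary directory.
out_path = os.path.join(tempfile.mkdtemp(), "ts.png")
ax.figure.savefig(out_path)

# Close the figure to free its memory once it has been written.
plt.close(ax.figure)

print(os.path.exists(out_path))  # True
```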

Let's Dive In

Head over to this Kaggle Notebook and clone it.

Additional Practice with Pandas

Spotify Data Analysis with Pandas

Pandas Stackoverflow Questions

Pandas Tutorials on real-world datasets

Plotting with Python

A great practice notebook to learn Plotly can be found here.

Introduction to Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks.[1] It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so.

Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks.

Glossary

Machine Learning is an extensive area of study and as you continue down this path it is essential you have access to a glossary of terms you will routinely encounter.

Google's Machine Learning Glossary is a great resource to keep at hand.

Recommended ML Courses

There are many courses available online to learn Machine Learning.

FastAI

Machine Learning FastAI course.

There are around 24 hours of lessons, and you should plan to spend around 8 hours a week for 12 weeks to complete the material. The course is based on lessons recorded at the University of San Francisco for the Masters of Science in Data Science program. We assume that you have at least one year of coding experience, and either remember what you learned in high school math, or are prepared to do some independent study to refresh your knowledge.

NPTEL

India's NPTEL (National Programme on Technology Enhanced Learning) is a joint venture of the IITs and IISc, funded by the Ministry of Education (MoE), Government of India, and was launched in 2003. Initially started as a project to take quality education to all corners of the country, NPTEL now offers more than 600 courses for certification every semester in about 22 disciplines.

Ebook

An Introduction to Statistical Learning

As the scale and scope of data collection continue to increase across virtually all fields, statistical learning has become a critical toolkit for anyone who wishes to understand data. An Introduction to Statistical Learning provides a broad and less technical treatment of key topics in statistical learning. Each chapter includes an R lab. This book is appropriate for anyone who wishes to use contemporary tools for data analysis.

The First Edition topics include:

- Sparse methods for classification and regression

- Decision trees

- Boosting

- Support vector machines

- Clustering

The Second Edition adds:

- Deep learning

- Survival analysis

- Multiple testing

- Naive Bayes and generalized linear models

- Bayesian additive regression trees

- Matrix completion

Tips for Beginners

Set concrete goals or deadlines.

Machine learning is a rich field that's expanding every year. It can be easy to go down rabbit holes. Set concrete goals for yourself and keep moving.

Walk before you run.

You might be tempted to jump into some of the newest, cutting edge sub-fields in machine learning such as deep learning or NLP. Try to stay focused on the core concepts at the start. These advanced topics will be much easier to understand once you've mastered the core skills.

Alternate between practice and theory.

Practice and theory go hand-in-hand. You won't be able to master theory without applying it, yet you won't know what to do without the theory.

Write a few algorithms from scratch.

Once you've had some practice applying algorithms from existing packages, you'll want to write a few from scratch. This will take your understanding to the next level and allow you to customize them in the future.

Seek different perspectives.

The way a statistician explains an algorithm will be different from the way a computer scientist explains it. Seek different explanations of the same topic.

Tie each algorithm to value.

For each tool or algorithm you learn, try to think of ways it could be applied in business or technology. This is essential for learning how to "think" like a data scientist.

Don't believe the hype.

Machine learning is not what the movies portray as artificial intelligence. It's a powerful tool, but you should approach problems with rationality and an open mind. ML should just be one tool in your arsenal!

Ignore the show-offs.

Sometimes you'll see people online debating with lots of math and jargon. If you don't understand it, don't be discouraged. What matters is: Can you use ML to add value in some way? And the answer is yes, you absolutely can.

Think "inputs/outputs" and ask "why."

At times, you might find yourself lost in the weeds. When in doubt, take a step back and think about how data inputs and outputs piece together. Ask "why" at each part of the process.

Find fun projects that interest you!

Rome wasn't built in a day, and neither will your machine learning skills be. Pick topics that interest you, take your time, and have fun along the way.

Excerpted from Elite Data Science

Survey of Machine Learning

One of the leaders in the field of Machine Learning, Sebastian Raschka, has written a very extensive survey of the state of Python in Machine Learning.

This survey paper is recommended for graduates and professionals who wish to equip themselves quickly with an overview of various aspects of Machine Learning.

Introduction to scikit-learn

“I literally owe my career in the data space to scikit-learn. It’s not just a framework but a school of thought regarding predictive modeling. Super well deserved, folks :)” Maykon Schots from Brazil

scikit-learn is:

- Simple and efficient tools for predictive data analysis

- Accessible to everybody, and reusable in various contexts

- Built on NumPy, SciPy, and matplotlib

- Open source, commercially usable - BSD license

scikit-learn is the most popular Python library for Machine Learning.

from sklearn.ensemble import RandomForestClassifier

clf = RandomForestClassifier(random_state=0)

X = [[ 1,  2,  3],  # 2 samples, 3 features
     [11, 12, 13]]

y = [0, 1] # classes of each sample

clf.fit(X, y)

In the few lines of code you see above, we have done a lot of work.

scikit-learn allows you to apply a large number of ML techniques. All of these techniques can be applied through a common interface that looks much like the above code snippet.

The fit method generally accepts two inputs:

- The samples matrix (or design matrix) X, whose size is typically (n_samples, n_features).

- The target values y, which are real numbers for regression tasks, or integers for classification (or any other discrete set of values). For unsupervised learning tasks, y does not need to be specified.

Once the estimator (Random Forest in the code snippet above) is fitted, it can be used for predicting target values of new data.
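
As a minimal sketch of that prediction step, the snippet below refits the same two-sample toy data and calls predict. The new samples passed to predict are hypothetical values chosen for illustration.

```python
from sklearn.ensemble import RandomForestClassifier

X = [[1, 2, 3], [11, 12, 13]]  # 2 samples, 3 features
y = [0, 1]                     # class of each sample

clf = RandomForestClassifier(random_state=0)
clf.fit(X, y)

# Predict the classes of the training samples.
train_pred = clf.predict(X)

# Predict the classes of new, unseen samples (hypothetical values).
new_pred = clf.predict([[4, 5, 6], [14, 15, 16]])

print(train_pred)
print(new_pred)
```

predict returns one label per input row, so each result here is an array of length 2. Other scikit-learn estimators follow the same fit-then-predict pattern.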

Let's dive in with this Notebook to develop an end-to-end ML model with scikit-learn.

Introduction to Deep Learning

Coming soon.

How to Keep Learning

We live in a time when knowledge sources are abundant and access to them is free and easy. This allows millions of people across the world to acquire skills and knowledge easily and at low cost.

We are also presented with a lot of choices, which can add to the confusion.

I have found the following sources of knowledge to be reliable.

Github

Online Lectures

Research Papers

YouTube

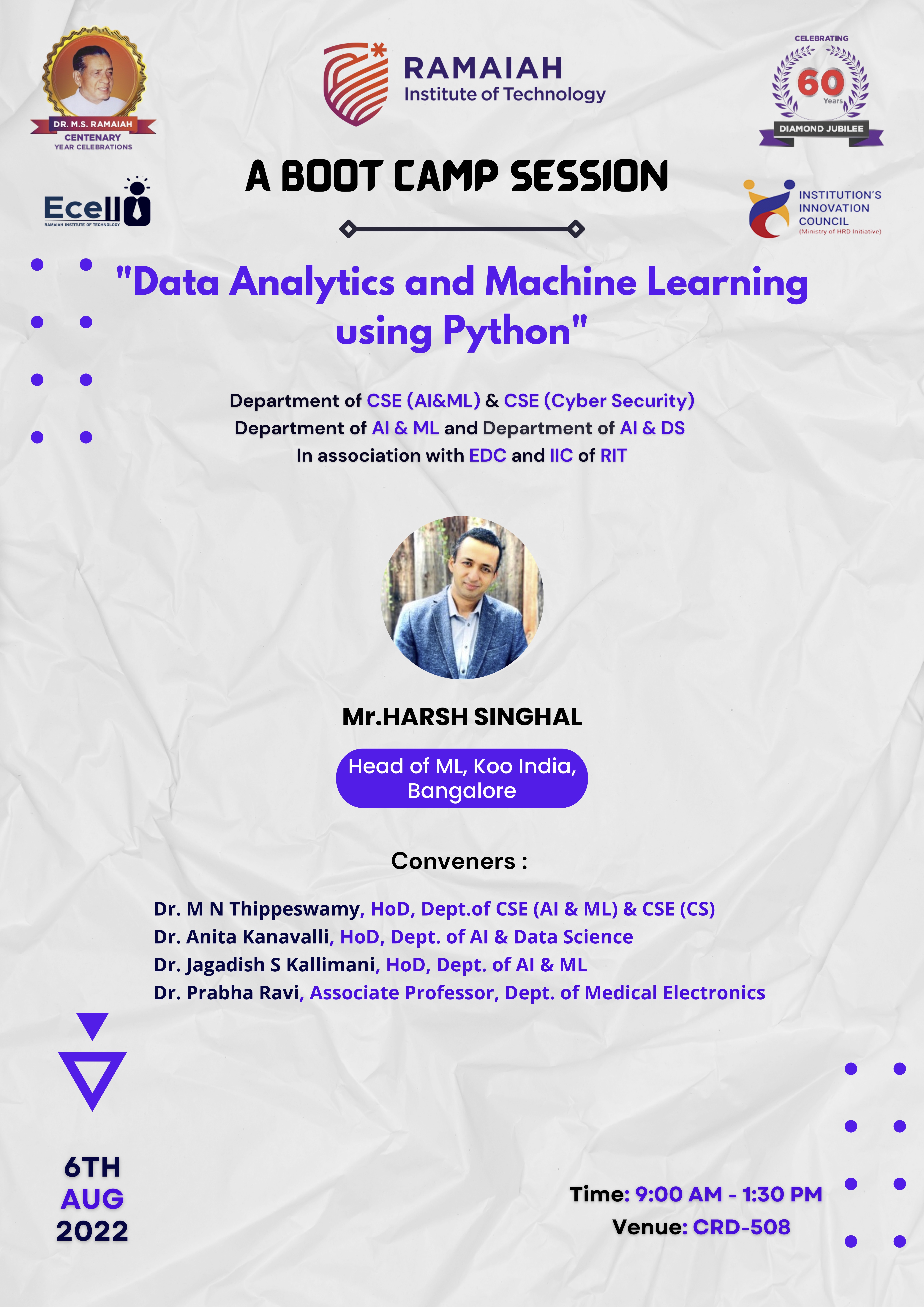

About Harsh Singhal

Harsh Singhal is a global Data Science and Machine Learning (DSML) leader.

Over the last decade Harsh has developed high-impact Machine Learning solutions at companies like LinkedIn and Netflix.

After having spent a decade in California, Harsh decided to move to India and contribute to India's DSML ecosystem.

Harsh is the head of Machine Learning at Koo, India's #1 social media platform connecting millions of people and allowing them to express themselves in their mother tongue.

Harsh actively works with student communities and guides them to excel in their journey towards DSML excellence. Harsh is also involved as an advisor in developing DSML curricula at academic institutions to increase AI talent density amongst India's student community.